10+ Content Validity Examples to Download

Claims about the adverse effects of social media on people are not new. More recently, however, Statista reported that Facebook, the leading social networking company, ran a test aimed at lessening the social pressure and competition felt by users, in which like counts were hidden on some Instagram and Facebook posts. In a related survey of people aged 14 to 24, Instagram ranked as the social media channel most harmful to mental health, while YouTube had the most positive impact on young people's mental state.

Aside from the fact that the statistic comes from a well-established source, what else gives you reason to trust the study? In the analysis mentioned above, the researchers considered factors such as anxiety, depression, loneliness, self-image, harassment, and the opportunity for self-expression to ensure that the data they collected would be relevant to the study. As a researcher, you need to cover all the necessary factors to ensure the quality of a test. Content validity, along with reliability, fairness, and legal defensibility, is one of the qualities you should take into account.

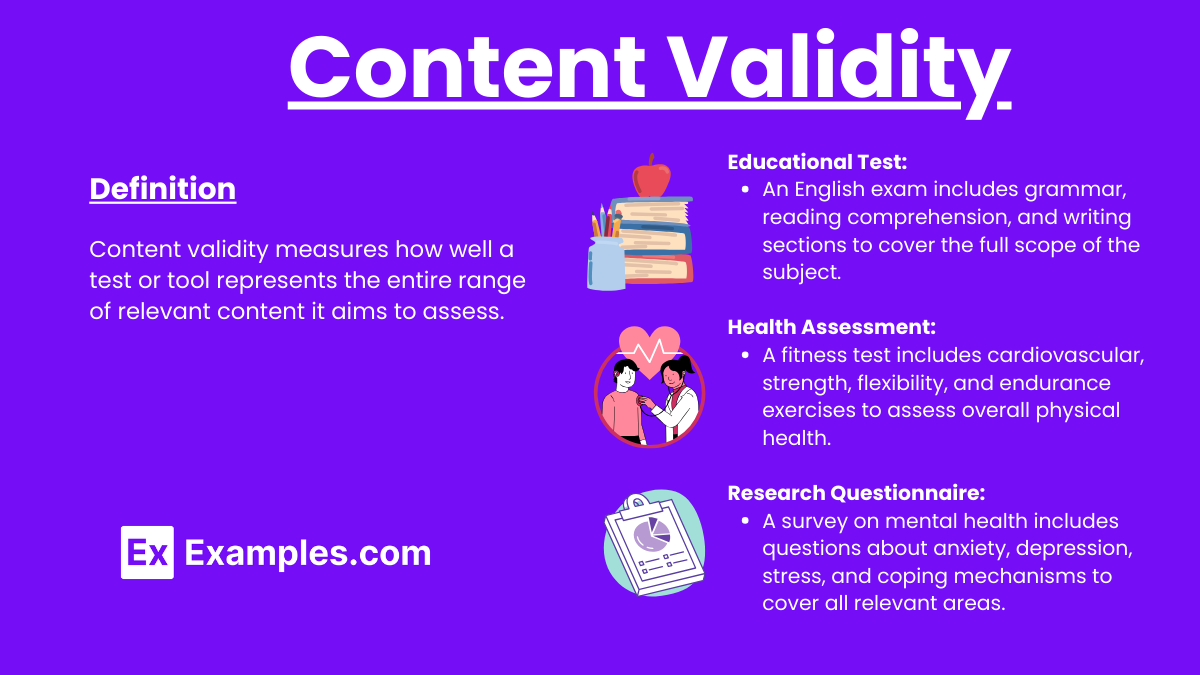

What Is Content Validity?

Content validity refers to the extent to which a test or measurement tool accurately represents the specific domain of content it aims to assess. It ensures that the items within the tool cover all aspects of the concept being measured comprehensively and appropriately.

When is content validity used?

- Educational Testing: Ensuring exam questions cover the full curriculum.

- Psychological Assessments: Validating that test items represent the construct being measured.

- Surveys and Questionnaires: Confirming questions cover all relevant aspects of the topic.

- Job Performance Evaluations: Ensuring evaluation criteria encompass all job duties and skills.

- Health Research: Validating that instruments measure all dimensions of a health condition or behavior.

Content Validity Examples

1. Educational Testing:

- Scenario: A final math exam for a high school algebra course.

- Application: The exam includes questions on all major topics covered during the semester: linear equations, quadratic functions, inequalities, and polynomial operations. By covering all these areas, the exam demonstrates content validity, ensuring that it accurately assesses students’ overall knowledge and skills in algebra.

2. Psychological Assessments:

- Scenario: A new psychological test for measuring depression.

- Application: The test includes items that assess various symptoms of depression: mood changes, sleep disturbances, appetite changes, feelings of worthlessness, and lack of energy. By covering these different aspects of depression, the test has content validity, representing the full spectrum of the disorder.

3. Surveys and Questionnaires:

- Scenario: A customer satisfaction survey for a retail store.

- Application: The survey includes questions on various dimensions of the shopping experience: product quality, store cleanliness, staff helpfulness, pricing, and overall satisfaction. Ensuring that all these dimensions are included gives the survey content validity, providing a comprehensive assessment of customer satisfaction.

4. Job Performance Evaluations:

- Scenario: A performance review for a project manager.

- Application: The evaluation form includes criteria related to project planning, team leadership, communication skills, budget management, and meeting deadlines. By evaluating all these key areas, the performance review demonstrates content validity, accurately reflecting the project manager’s job responsibilities and performance.

5. Health Research:

- Scenario: A quality of life questionnaire for patients with chronic pain.

- Application: The questionnaire includes items that assess physical functioning, pain severity, emotional well-being, social interactions, and daily activities. Covering these different dimensions ensures content validity, capturing the full impact of chronic pain on the patient’s quality of life.

6. Employee Training Programs:

- Scenario: An assessment tool for evaluating the effectiveness of a customer service training program.

- Application: The tool includes questions and tasks related to different aspects of customer service: communication skills, problem-solving abilities, product knowledge, and handling difficult customers. Ensuring that all these aspects are covered gives the assessment content validity, accurately evaluating the training program’s effectiveness.

7. Medical Licensing Exams:

- Scenario: A licensing exam for medical practitioners.

- Application: The exam includes sections on various areas of medical knowledge and practice: anatomy, physiology, pharmacology, clinical diagnosis, and patient care. By covering all these essential areas, the exam demonstrates content validity, ensuring that it comprehensively assesses the candidate’s competence to practice medicine.

8. Employee Selection Tests:

- Scenario: An aptitude test for hiring software developers.

- Application: The test includes questions on coding skills, problem-solving abilities, understanding of algorithms, and software development principles. By ensuring that these areas are covered, the test has content validity, accurately evaluating the candidates’ suitability for the software developer role.

If you are looking for documents that apply a content validity approach, check the list below. Applying content validity to research and other projects can be complicated, so we have included a number of documents that you can use as references or guides when applying the method to your own project.

1. Content Validity Evidence in Test Development Template

2. Face and Content Validity Evaluation for Instructional Technology Competency Instrument Example Template

3. Content Validation in Personnel Assessment Template

4. Face and Content Validity of a Teaching and Learning Guiding Principles Instrument Evaluation Template

5. Questionnaire Content Validity Example

6. Examination Content Validity Example Template

7. Investigating Content and Face Validity of a Placement Test

8. Psychological Assessment Content Validity Template

9. Questionnaire Content Validity and Test Retest Reliability Template

10. Content Validation in Assessment Decision Guide

11. Qualitative Research Validity Template

Difference Between Content Validity and Face Validity

| Aspect | Content Validity | Face Validity |
|---|---|---|
| Definition | The extent to which a test or measurement tool covers all aspects of the concept it aims to measure. | The degree to which a test appears to measure what it claims to measure at face value. |
| Depth of Evaluation | In-depth and comprehensive evaluation of content coverage. | Superficial and subjective evaluation based on appearance. |
| Assessment Method | Typically assessed by experts in the field who evaluate the content domains. | Often judged by non-experts or users based on their initial impression. |
| Purpose | Ensures the test comprehensively covers the relevant content areas for accurate measurement. | Ensures the test looks like it measures the intended construct, aiding acceptance by test-takers. |
| Importance | Critical for the overall validity of the test and of the conclusions drawn from its scores. | Important for the perceived credibility and acceptance of the test. |
| Evaluation Process | Involves detailed reviews, blueprints, and often pilot testing to confirm coverage. | Involves simple visual inspection and immediate judgment about the test’s relevance. |
| Example | An exam for a medical course covering all required knowledge areas, such as anatomy, physiology, and pharmacology. | A survey on job satisfaction that looks like it measures various aspects of job satisfaction, such as work environment and pay. |
| Expert Involvement | High – requires input from subject matter experts. | Low – can be judged by laypersons or end-users. |
| Outcome | Produces a tool that is comprehensive and thorough in its measurement. | Produces a tool that is readily accepted and trusted by users based on appearance. |

Why is content validity important?

- Comprehensive Measurement: Ensures that the assessment tool covers all relevant aspects of the construct, leading to a more accurate and thorough evaluation.

- Accuracy: By including all necessary content areas, the tool reduces the risk of missing critical information about the construct. Reliability, meaning the consistency of scores, still has to be established separately.

- Enhanced Credibility: A test with high content validity is more credible and acceptable to stakeholders, including test-takers, educators, and researchers.

- Foundation for Construct Validity: Content validity is a foundational step in establishing overall construct validity, that is, showing that the test measures what it is intended to measure.

- Improved Decision-Making: Accurate and comprehensive assessments lead to better-informed decisions, whether in educational settings, clinical diagnoses, or organizational evaluations.

How to Establish Content Validity Evidence

Aside from the examples, we have also included the following steps, which you can use to establish content validity evidence for your research project.

1. Define the Purpose of a Test

In this step, determine why the test is necessary. List who will take the test and how it will be administered. Then decide how you will interpret the results once the test has been given.

For example, suppose you want to measure how satisfied customers are with the customer service your business provides. That becomes the purpose of the test. Next, decide how to reach your participants: you could send customers an online form with specific questions, or invite them to a short interview, although interviews can be inconvenient for some customers. Weigh factors like these when choosing the most appropriate method.

2. Develop the Test

Once you have clearly stated the purpose of the test, you can start developing it. Throughout development, keep the purposes you defined in the first step in view so that the test generates the data you need for the evaluation.

3. Execution

In this step, you administer the test you developed. To measure the test's effectiveness accurately, stick to the plan you made during development. For example, if your plan specifies that you will collect the necessary information through a customer survey with specific questions, administer that survey as designed.

4. Evaluation

If the test was constructed correctly, you can be confident that it measures the intended subject area. In this step, check whether the test generated the intended data and whether the analysis serves the purposes you listed in the first step of establishing content validity evidence.

Step-by-step guide: How to measure content validity

Step 1: Define the Construct

- Objective: Clearly define the concept or domain you aim to measure.

- Action: Create a detailed description of all aspects and dimensions of the construct. For example, if measuring “job satisfaction,” identify subdomains like work environment, compensation, and job role.

Step 2: Develop a Content Blueprint

- Objective: Ensure comprehensive coverage of the construct.

- Action: Outline a blueprint or framework that lists all areas and sub-areas of the construct. This helps in mapping out what the test or assessment should include.
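Steps 1 and 2 can be made concrete by writing the blueprint down as data. The sketch below is a minimal illustration, assuming a simple structure in which each domain is split into subdomains with a planned number of items; the `Blueprint` and `Subdomain` names, the subdomains, and the item counts are all hypothetical rather than part of any standard tool.

```python
from dataclasses import dataclass, field


@dataclass
class Subdomain:
    name: str
    target_items: int  # number of test items planned for this sub-area


@dataclass
class Blueprint:
    construct: str
    # domain name -> list of its subdomains (hypothetical structure)
    domains: dict[str, list[Subdomain]] = field(default_factory=dict)

    def total_items(self) -> int:
        """Total number of items the blueprint calls for."""
        return sum(s.target_items for subs in self.domains.values() for s in subs)

    def weights(self) -> dict[str, float]:
        """Share of the test allocated to each domain."""
        total = self.total_items()
        if total == 0:
            return {domain: 0.0 for domain in self.domains}
        return {
            domain: sum(s.target_items for s in subs) / total
            for domain, subs in self.domains.items()
        }


# Hypothetical blueprint for the "job satisfaction" example in Step 1.
job_satisfaction = Blueprint(
    construct="job satisfaction",
    domains={
        "work environment": [Subdomain("physical workspace", 2), Subdomain("coworker relations", 3)],
        "compensation": [Subdomain("salary", 3), Subdomain("benefits", 2)],
        "job role": [Subdomain("autonomy", 2), Subdomain("workload", 3)],
    },
)

print(job_satisfaction.total_items())  # 15
print(job_satisfaction.weights())
```

Recording a target count per subdomain makes the weight of each domain explicit, so reviewers can see at a glance whether any area is over- or under-represented.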

Step 3: Generate Test Items

- Objective: Create items that cover the entire domain of the construct.

- Action: Write questions or tasks for each area identified in the blueprint. Ensure a balanced representation of all subdomains.
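For Step 3, one way to check for balanced representation is to tag each draft item with the blueprint area it targets and compare the counts with the plan. This is a minimal sketch; the blueprint, the item wording, and the `coverage_gaps` helper are hypothetical.

```python
from collections import Counter

# Hypothetical blueprint: area -> number of items planned for it.
blueprint = {"work environment": 3, "compensation": 3, "job role": 3}

# Draft items, each tagged with the blueprint area it is meant to cover.
items = [
    ("I am comfortable in my physical workspace.", "work environment"),
    ("My coworkers support me.", "work environment"),
    ("My salary is fair for my duties.", "compensation"),
    ("I am satisfied with my benefits.", "compensation"),
    ("My pay compares well with similar jobs.", "compensation"),
    ("I have enough autonomy in my role.", "job role"),
]


def coverage_gaps(blueprint, items):
    """Return each area with fewer items than planned, mapped to its shortfall."""
    counts = Counter(area for _, area in items)
    unknown = set(counts) - set(blueprint)
    if unknown:
        raise ValueError(f"Items tagged with areas not in the blueprint: {sorted(unknown)}")
    return {
        area: planned - counts[area]
        for area, planned in blueprint.items()
        if counts[area] < planned
    }


print(coverage_gaps(blueprint, items))  # {'work environment': 1, 'job role': 2}
```

This sketch only reports shortfalls; areas with more items than planned are not flagged, though a symmetric check could catch those too.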

Step 4: Engage Subject Matter Experts (SMEs)

- Objective: Validate the relevance and coverage of the test items.

- Action: Assemble a panel of experts in the field related to the construct. Provide them with the blueprint and test items for review.

Step 5: Conduct Expert Review

- Objective: Assess the adequacy of content coverage.

- Action: Have the experts evaluate each item for relevance, clarity, and representativeness. They should rate items based on how well they cover the specific aspects of the construct.
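The expert review in Step 5 produces one rating per expert, item, and criterion. The sketch below assumes a 1-to-4 scale (as suggested in Step 6) and a hypothetical three-expert panel; it checks that the ratings are complete and in range, then averages each criterion per item. Averaging ordinal ratings is a simplifying assumption for a quick overview, not a formal analysis.

```python
from statistics import mean

CRITERIA = ("relevance", "clarity", "representativeness")
SCALE = range(1, 5)  # assumed 1-4 rating scale

# ratings[expert][item][criterion] -> score, from a hypothetical three-expert panel
ratings = {
    "expert_a": {
        "Q1": {"relevance": 4, "clarity": 3, "representativeness": 4},
        "Q2": {"relevance": 2, "clarity": 2, "representativeness": 1},
    },
    "expert_b": {
        "Q1": {"relevance": 3, "clarity": 4, "representativeness": 3},
        "Q2": {"relevance": 1, "clarity": 3, "representativeness": 2},
    },
    "expert_c": {
        "Q1": {"relevance": 4, "clarity": 4, "representativeness": 4},
        "Q2": {"relevance": 3, "clarity": 2, "representativeness": 2},
    },
}


def validate(ratings):
    """Raise if any expert skipped an item or criterion, or used an out-of-range score."""
    item_sets = {frozenset(by_item) for by_item in ratings.values()}
    if len(item_sets) != 1:
        raise ValueError("Experts did not all rate the same set of items")
    for expert, by_item in ratings.items():
        for item, scores in by_item.items():
            for criterion in CRITERIA:
                if scores.get(criterion) not in SCALE:
                    raise ValueError(f"{expert}: missing or invalid {criterion} score for {item}")


def summarize(ratings):
    """Mean score per item and criterion across the panel."""
    items = next(iter(ratings.values())).keys()
    return {
        item: {c: mean(by_item[item][c] for by_item in ratings.values()) for c in CRITERIA}
        for item in items
    }


validate(ratings)
print(summarize(ratings))
```

Here Q2 scores low on relevance across the panel, which is the kind of signal the CVI in Step 6 quantifies.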

Step 6: Calculate Content Validity Index (CVI)

- Objective: Quantify the level of agreement among experts.

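- Action: Have each expert rate each item on a scale (e.g., 1 to 4), and treat ratings of 3 or 4 as "relevant." For each item, calculate the proportion of experts who rated it as relevant; this is the item-level CVI (I-CVI). The scale-level CVI (S-CVI) can then be calculated either as the average of the I-CVIs (S-CVI/Ave) or as the proportion of items rated as relevant by all experts (S-CVI/UA, for universal agreement).

- Item-Level CVI (I-CVI): $\text{I-CVI} = \dfrac{\text{number of experts rating the item as relevant}}{\text{total number of experts}}$

- Scale-Level CVI, averaging method (S-CVI/Ave): $\text{S-CVI/Ave} = \dfrac{\sum \text{I-CVI}}{\text{total number of items}}$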
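The Step 6 formulas translate directly into code. This sketch assumes a 1-to-4 relevance scale on which 3 or 4 counts as relevant, and uses made-up ratings from five experts; it computes the I-CVI for each item plus both scale-level variants, S-CVI/Ave and S-CVI/UA.

```python
# Relevance ratings on an assumed 1-4 scale: one list per item, one entry per expert.
# Hypothetical data from a panel of five experts.
relevance = {
    "Q1": [4, 4, 3, 4, 3],
    "Q2": [4, 3, 2, 4, 4],
    "Q3": [2, 1, 3, 2, 2],
    "Q4": [3, 4, 4, 4, 3],
}

RELEVANT = {3, 4}  # ratings of 3 or 4 count as "relevant"


def item_cvi(scores):
    """I-CVI: share of experts who rated the item as relevant."""
    return sum(s in RELEVANT for s in scores) / len(scores)


def scale_cvi_ave(data):
    """S-CVI/Ave: mean of the item-level CVIs."""
    icvis = [item_cvi(scores) for scores in data.values()]
    return sum(icvis) / len(icvis)


def scale_cvi_ua(data):
    """S-CVI/UA: share of items that every expert rated as relevant."""
    return sum(item_cvi(scores) == 1.0 for scores in data.values()) / len(data)


for item, scores in relevance.items():
    print(item, item_cvi(scores))
print("S-CVI/Ave:", scale_cvi_ave(relevance))
print("S-CVI/UA:", scale_cvi_ua(relevance))
```

With these ratings, Q3 (I-CVI 0.2) stands out as the item to revisit in Step 7. S-CVI/UA can never exceed S-CVI/Ave, because it credits only items with unanimous agreement.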

Step 7: Revise Based on Feedback

- Objective: Improve the test based on expert input.

- Action: Revise or remove items with low CVI scores. Add new items if necessary to cover any gaps identified by the experts.
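The "revise or remove" decision in Step 7 can be expressed as a simple triage on the I-CVI values. The cut-offs are assumptions: 0.78 is a value frequently used in the content validity literature as a minimum acceptable I-CVI, while the 0.5 boundary between revising and removing is purely illustrative. The I-CVI values are hypothetical.

```python
# Hypothetical I-CVI values, e.g. from the Step 6 calculation.
icvi = {"Q1": 1.0, "Q2": 0.8, "Q3": 0.2, "Q4": 1.0, "Q5": 0.6}

KEEP_AT = 0.78   # assumed minimum I-CVI for keeping an item as is
REVISE_AT = 0.5  # assumed: items between the two cut-offs are reworded, not dropped


def triage(icvi, keep_at=KEEP_AT, revise_at=REVISE_AT):
    """Sort items into keep / revise / remove buckets by their I-CVI."""
    decision = {"keep": [], "revise": [], "remove": []}
    for item, value in icvi.items():
        if value >= keep_at:
            decision["keep"].append(item)
        elif value >= revise_at:
            decision["revise"].append(item)
        else:
            decision["remove"].append(item)
    return decision


print(triage(icvi))
```

Any item that is removed may leave a gap in the blueprint, so the coverage check from Step 3 is worth rerunning after this step.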

Step 8: Pilot Testing

- Objective: Test the revised assessment for practical application.

- Action: Administer the test to a small, representative sample of the target population. Gather feedback on item clarity and relevance.

Step 9: Analyze Pilot Data

- Objective: Validate the effectiveness of the revisions.

- Action: Analyze the pilot test results to identify any remaining issues with item clarity or coverage. Make final adjustments as needed.
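For Step 9, a simple first pass over pilot feedback is to count how often each item was marked unclear or left unanswered. This sketch assumes the pilot collected exactly those two kinds of feedback; the data, the item IDs, and the 25% flagging threshold are hypothetical.

```python
# Hypothetical pilot feedback: for each respondent, the items they marked as
# unclear and the items they left unanswered.
pilot = [
    {"unclear": {"Q2"}, "skipped": set()},
    {"unclear": {"Q2", "Q5"}, "skipped": {"Q5"}},
    {"unclear": set(), "skipped": set()},
    {"unclear": {"Q2"}, "skipped": {"Q5"}},
]
items = ["Q1", "Q2", "Q4", "Q5"]

FLAG_AT = 0.25  # assumed: flag an item if at least 25% of respondents had trouble with it


def flag_items(pilot, items, flag_at=FLAG_AT):
    """Return items whose 'unclear' or 'skipped' rate reaches the threshold."""
    n = len(pilot)
    flagged = {}
    for item in items:
        unclear = sum(item in r["unclear"] for r in pilot) / n
        skipped = sum(item in r["skipped"] for r in pilot) / n
        if unclear >= flag_at or skipped >= flag_at:
            flagged[item] = {"unclear": unclear, "skipped": skipped}
    return flagged


print(flag_items(pilot, items))
```

Flagged items are candidates for the final adjustments; with a real pilot sample, the threshold should reflect its size.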

Step 10: Finalize the Assessment Tool

- Objective: Ensure the assessment tool is ready for use.

- Action: Incorporate all feedback and finalize the items. Ensure the test now has strong content validity, covering all aspects of the construct comprehensively.

What is content validity vs criterion validity?

Content validity ensures a test covers all aspects of the construct, while criterion validity measures how well test scores agree with or predict an external criterion, such as a later outcome or scores on an established test.

What is an example of construct and content validity?

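Construct validity: A depression scale's scores relate to other variables the way theory predicts, for example by correlating strongly with established depression measures. Content validity: A math test includes questions on all topics covered in the curriculum.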

What is content validity of an item?

Content validity of an item refers to how well a specific question or task represents the domain it aims to measure within the overall construct.

How do you determine content validity?

Determine content validity by defining the construct, creating a content blueprint, generating items, and having experts review and rate the relevance of each item.

What is another name for content validity?

Another name for content validity is “logical validity.”

Why use content validity?

Use content validity to ensure that an assessment tool comprehensively and accurately measures all aspects of the intended construct, which strengthens the validity of the conclusions drawn from its results.

How do you assess validity of content analysis?

Assess validity of content analysis by ensuring that the coding scheme captures all relevant themes, and by having multiple coders review and agree on the categorization of content.
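Agreement between coders can be quantified. The sketch below computes simple percent agreement and Cohen's kappa, a widely used statistic that corrects the agreement between two coders for chance; kappa is one common choice rather than the only option, and the category labels are hypothetical.

```python
from collections import Counter


def percent_agreement(a, b):
    """Share of units both coders placed in the same category."""
    if len(a) != len(b) or not a:
        raise ValueError("Coders must label the same, non-empty set of units")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: observed agreement corrected for expected chance agreement."""
    p_o = percent_agreement(a, b)
    n = len(a)
    freq_a, freq_b = Counter(a), Counter(b)
    # Chance agreement: product of each coder's marginal proportions, summed over categories.
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        raise ValueError("Kappa is undefined when both coders use one identical category")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical codes assigned by two coders to the same six passages.
coder_1 = ["anxiety", "sleep", "body image", "anxiety", "sleep", "bullying"]
coder_2 = ["anxiety", "sleep", "body image", "sleep", "sleep", "bullying"]
print(percent_agreement(coder_1, coder_2))
print(round(cohens_kappa(coder_1, coder_2), 3))  # 0.769
```

Kappa is lower than raw agreement here because some matches would be expected even if both coders assigned categories at random in the same proportions.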

Is content validity good?

Yes. High content validity is desirable because it shows that a test or assessment tool represents the full domain of the construct it aims to measure. On its own, however, it does not establish reliability or other forms of validity.