9+ Discriminant Validity Examples to Download

Data and statistics can seem like hard, cold evidence to prove our point. However, numbers don’t tell the whole story. We may have to run tests and data analysis on non-quantitative concepts. Unlike numbers, these constructs can be harder to analyze and study. How do we make sure that our methods and tests actually evaluate a specific non-numerical construct? Discriminant validity is the degree to which a test or scale differs from another test or scale that is meant to assess a different construct. It is vital in the social and behavioral sciences, such as psychology, to avoid ambiguity in research findings.

We don’t exist in a binary world where everything can be simplified and isolated into ones and zeroes. If we are even to begin understanding the sheer volume of intricacies involved, we need to narrow in on different constructs one at a time. By checking for construct validity in a study, we can evaluate whether we are, in fact, examining only the concept in question. Both convergent and discriminant validity fall under the umbrella of construct validity. When we say that a test has high construct validity, we mean that it measures what it was designed to measure. This means that the test is both convergently and discriminantly valid. Convergent validity looks at how closely tests of related constructs agree. Discriminant validity, on the other hand, is concerned with how unrelated tests of distinct constructs are.

Validity In Research

As we broaden our knowledge with the help of research papers, we refine the available information and its consequences in a variety of fields. The paradigm of mental health, and of health in general, benefits from these updates. More than 3.5 million people in the US suffer from schizophrenia. Researchers may have reason to suspect that a fraction of them have been misdiagnosed. Schizophrenia is a mental disorder that affects how a person thinks, feels, and behaves. The symptoms include psychosis, impaired cognitive functions, and loss of motivation. However, people with an immune system disorder that affects the NMDA receptors of the brain can also display schizophrenia-like symptoms. The latter can be treated with immunotherapy.

Considering this information, we can redefine our idea of the mental disorder to diagnose and treat it better with updated tests. We can evaluate whether we are indeed assessing the concept from the right angle and perspective. We can come up with more conclusive results when our research is based on a valid look at the construct. Matters like this are complicated, and quantifying them into something measurable is challenging. When two constructs are theoretically different, our tests for them should also be distinct from each other.

9+ Discriminant Validity Examples

Discriminant validity, as a subset of construct validity, will assure researchers that the test, assessment, or method used in evaluating the non-numerical construct is appropriate and applicable in the given context. This branch of validity operates under the principle that tests and methods shouldn’t overlap when the constructs they are meant to measure aren’t related in theory. The following are examples of discriminant validity as used in research and related literature.

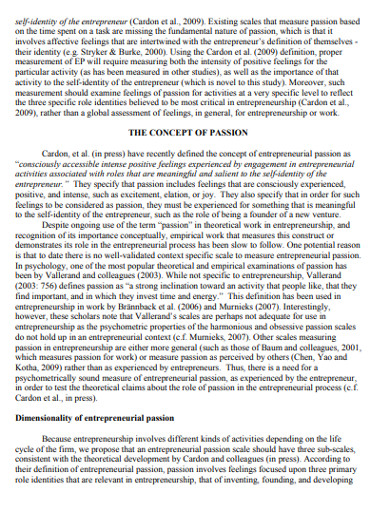

1. Discriminant Validity of Entrepreneurial Passion Example

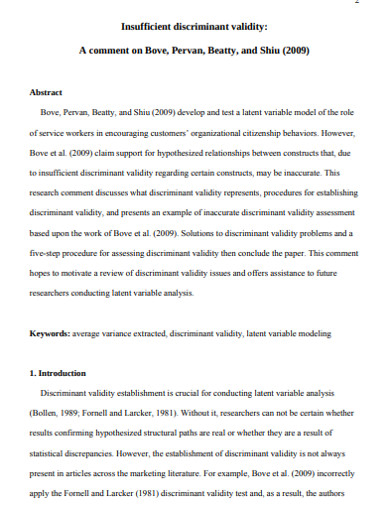

2. Insufficient Discriminant Validity Example

3. Discriminant Validity of Perceptual Incivilities Measures

4. Discriminant Validity Assessment Example

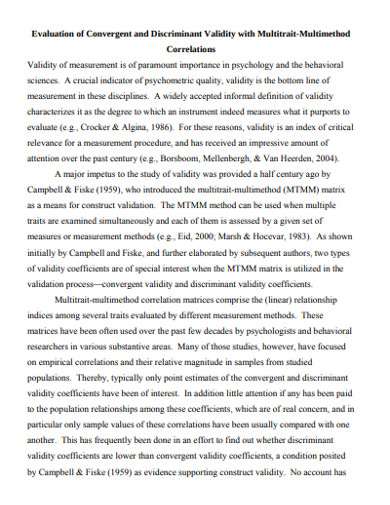

5. Evaluation of Discriminant Validity Example

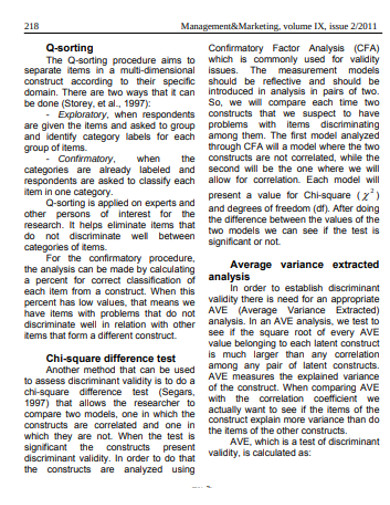

6. Basic Discriminant Validity Example

7. Construct and Discriminant Validity Example

8. Formal Discriminant Validity Example

9. Discriminant Validity and Cross-Validity Assessment

10. Discriminant Validity Template

Improving Discriminant Validity

There are numerous methods to measure discriminant validity. Evaluating the correlation coefficient between scales is one. Using this technique, you must show little to no correlation between the two scales to claim that the research has discriminant validity. The correlation coefficient ranges from -1 to 1. Here, you want a coefficient at or close to 0. Remember, negative values don’t show a lack of correlation but an inverse relationship between the scales. How do you improve the discriminant validity of research methods so that they reflect the theoretical distinctiveness of the constructs?
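Before turning to those improvements, the correlation check described above can be sketched in Python. This is only a minimal illustration, not a standard procedure: the scores are made up, the function names are hypothetical, and the 0.3 cutoff for "close to 0" is an assumption chosen for the example rather than an established rule.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from each mean
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a scale has no variance")
    return cov / sqrt(var_x * var_y)


def looks_discriminant(x, y, threshold=0.3):
    """True when |r| falls below the threshold.

    The default threshold of 0.3 is an illustrative assumption only.
    The absolute value is used because a strong negative r signals an
    inverse relationship, not a lack of correlation.
    """
    return abs(pearson_r(x, y)) < threshold


# Made-up scores from the same five participants on three scales
scale_a = [1, 2, 3, 4, 5]
scale_b = [2, 1, 4, 1, 2]  # unrelated to scale_a
scale_c = [5, 4, 3, 2, 1]  # perfectly inverse to scale_a

print(pearson_r(scale_a, scale_b), looks_discriminant(scale_a, scale_b))
print(pearson_r(scale_a, scale_c), looks_discriminant(scale_a, scale_c))
```

Here `scale_a` and `scale_b` are uncorrelated, so the pair passes the check. `scale_a` and `scale_c` have a coefficient of -1, and the pair fails because the check uses the absolute value of the coefficient.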

1. Definitions Are Important

Unclear, broad, and ambiguous definitions of what you want to measure threaten the construct validity of your research method. Therefore, you have to know exactly what the construct is, as well as its relationship with other constructs. Constructs may be related, but some aspects of a concept’s identity will differentiate it from the others. You have to think thoroughly about the concept you are investigating. Specify the observed manifestations of that abstract idea that will make it measurable in the real world, such as the symptoms of a disease for its severity or an EIQ score for a person’s emotional intelligence.

2. Use Multiple Measures

Don’t ignore the multiple manifestations of a construct. Mono-operation bias is a threat to the construct validity of research methods. By using only a single treatment in our research, we limit our understanding and the potential insights that we could glean from the study. Unless we specifically want to study a certain condition’s effect, we should design our methods so that they include other treatments. Even though we narrow our focus to a single construct, we should consider that it can manifest in a variety of ways.

3. Avoid Hasty Generalizations

In relation to the previous tip, subscribing to only a single treatment or method can lead to hasty generalizations. For example, you may have concluded that something has a negative or positive effect, but you only tested one treatment. Maybe the treatment’s efficacy is proportional to the amount applied. It can also be that you missed other consequences of an activity or interaction. You should consider using multiple treatments and be diligent in noting details. Not only will you gather more information about a construct, but you can also avoid this error in reasoning.

4. Reduce Hawthorne Effect

Unless your research calls for it, look for ways to avoid inducing the Hawthorne effect when dealing with human participants. There are instances when people try to impress their observers by “performing” the role given to them. They may be more submissive to the attending researcher or respond with reactions that they think the researchers want to get from them. These unnatural responses may skew your data and misrepresent the construct you are studying.