A microsecond is a unit of time in the International System of Units (SI) that equals one millionth of a second. It is symbolized as µs. This very short time frame is particularly important in fields such as physics, engineering, and computing, where extremely quick processes need to be measured or timed. For example, microseconds are commonly used to gauge the speed of electronic circuits, the timing of signals in telecommunications, and various scientific experiments where precise time measurement is critical.

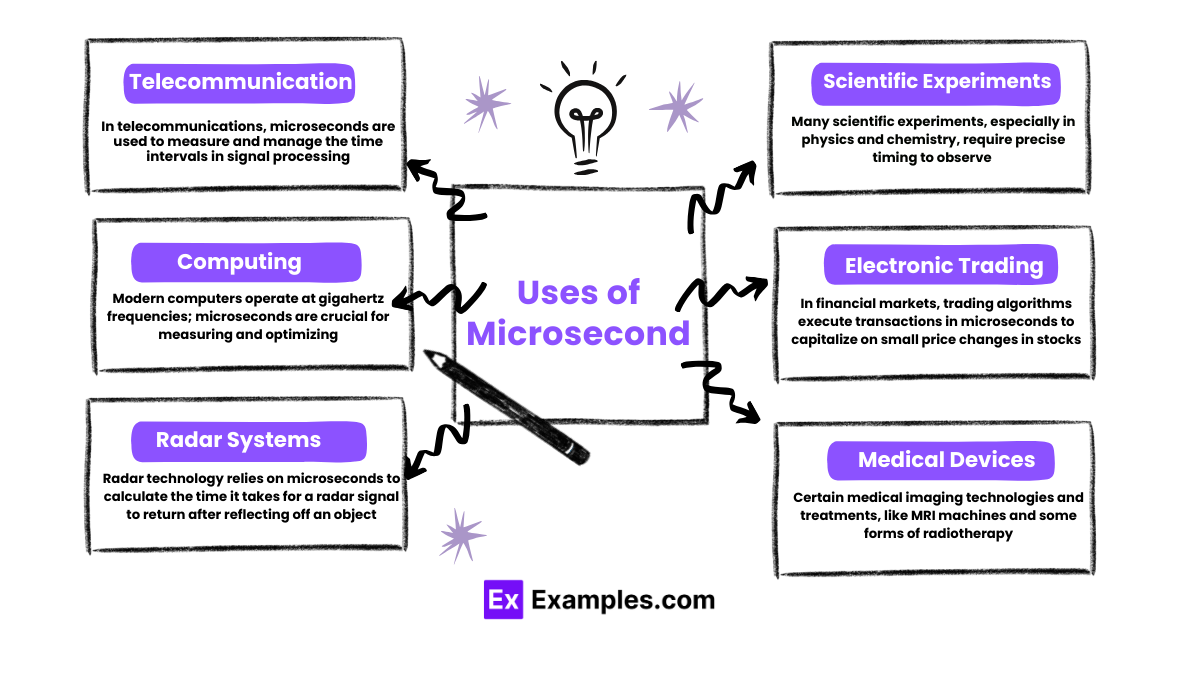
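Python's standard `datetime.timedelta` type uses the microsecond as its finest unit, which makes it a convenient way to check the definition above. This is a minimal sketch using only the standard library; it is not tied to any tool discussed in this article:

```python
from datetime import timedelta

# timedelta stores durations as days, seconds, and microseconds, so one
# microsecond is its smallest exactly representable step.
one_us = timedelta(microseconds=1)
assert timedelta.resolution == one_us

# One million microseconds make one second ...
assert one_us * 1_000_000 == timedelta(seconds=1)
# ... and 1,000 microseconds make one millisecond.
assert timedelta(microseconds=1_000) == timedelta(milliseconds=1)

print(one_us.total_seconds())  # 1e-06
```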

This brief interval matters wherever very fast phenomena must be observed, measured, or controlled with high precision. Microseconds are used in telecommunications to assess signal timing, in computing to optimize processor operations, and in scientific research to time fast chemical and physical reactions.

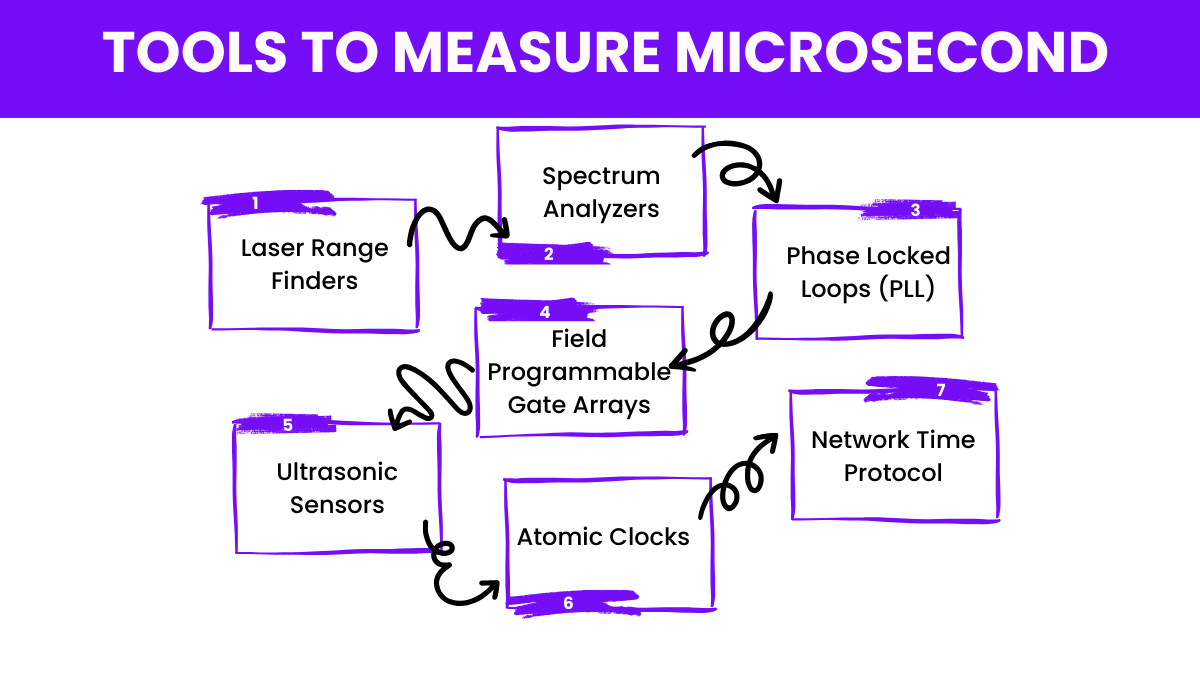

Measuring time intervals as short as microseconds requires precise and specialized tools. Here are some tools commonly used to measure microseconds:

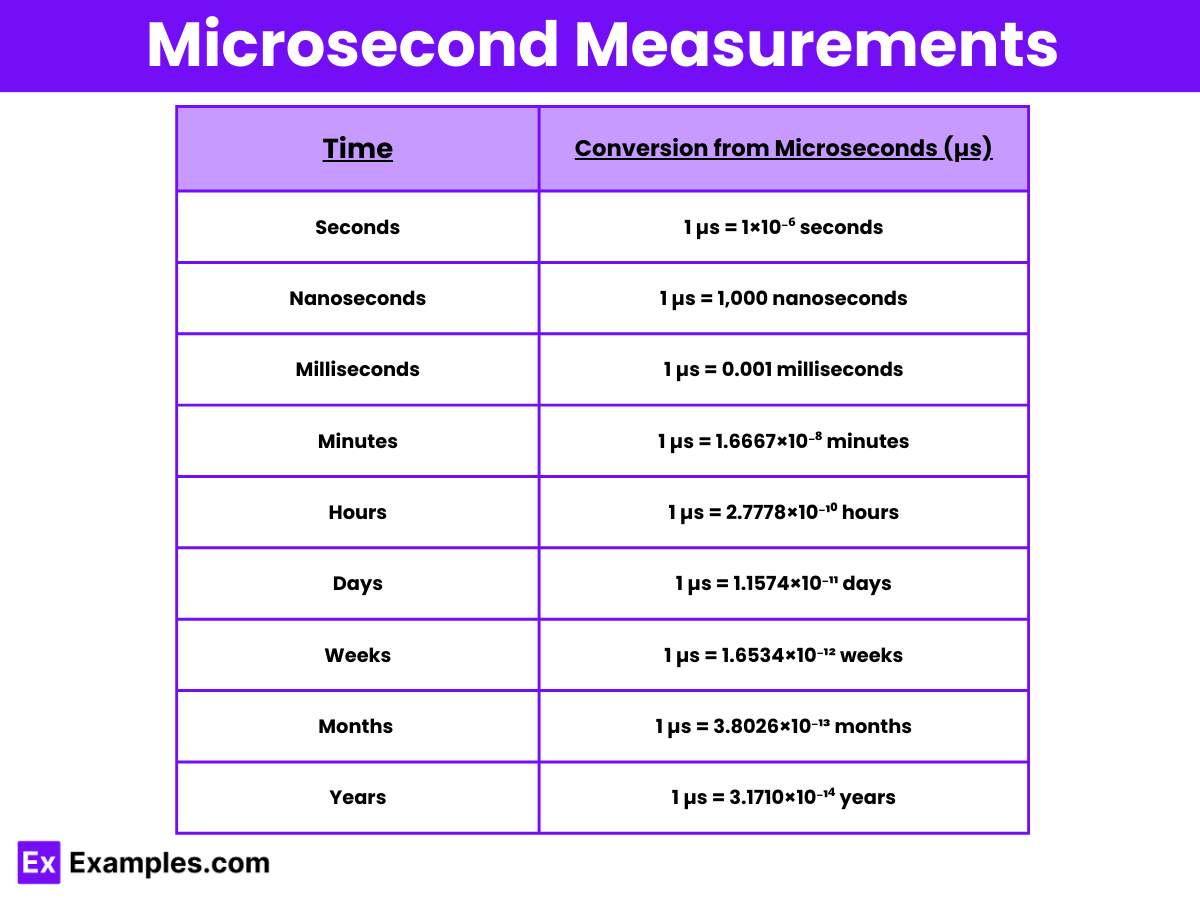
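Alongside dedicated instruments, a rough software measurement at microsecond scale is possible with Python's `time.perf_counter_ns()`, which reads a high-resolution counter as an integer number of nanoseconds. Treat this as a sketch only: the helper name `elapsed_microseconds` is hypothetical, and the result includes interpreter overhead and depends on the platform's clock resolution.

```python
import time


def elapsed_microseconds(func, *args, **kwargs):
    """Call func once; return (its result, elapsed time in µs).

    Hypothetical helper. time.perf_counter_ns() returns an integer count of
    nanoseconds from a high-resolution counter, so dividing the difference
    by 1,000 converts ns to µs. Real resolution varies by OS and hardware.
    """
    start_ns = time.perf_counter_ns()
    result = func(*args, **kwargs)
    end_ns = time.perf_counter_ns()
    return result, (end_ns - start_ns) / 1_000


total, took_us = elapsed_microseconds(sum, range(10_000))
print(f"sum = {total}, took {took_us:.1f} µs")
```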

Here’s a table showing the conversion of microseconds to other common units of time:

| Time Unit | Conversion from Microseconds (µs) |
|---|---|
| Seconds | 1 µs = 1×10⁻⁶ seconds |
| Milliseconds | 1 µs = 0.001 milliseconds |
| Nanoseconds | 1 µs = 1,000 nanoseconds |
| Minutes | 1 µs = 1.6667×10⁻⁸ minutes |
| Hours | 1 µs = 2.7778×10⁻¹⁰ hours |
| Days | 1 µs = 1.1574×10⁻¹¹ days |
| Weeks | 1 µs = 1.6534×10⁻¹² weeks |
| Months (average, ≈30.44 days) | 1 µs = 3.8026×10⁻¹³ months |
| Years (365 days) | 1 µs = 3.1710×10⁻¹⁴ years |
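The table can be regenerated from the number of seconds in each unit. This Python sketch assumes a 365-day year and an average Gregorian month of 365.2425 / 12 days, which are calendar assumptions that reproduce the figures in the table; the names `SECONDS_PER_UNIT` and `microseconds_to` are illustrative:

```python
# Seconds in each target unit. The calendar figures are assumptions chosen
# to match the table: a 365-day year and an average Gregorian month of
# 365.2425 / 12 days (about 30.44 days).
SECONDS_PER_UNIT = {
    "seconds": 1,
    "milliseconds": 1e-3,
    "nanoseconds": 1e-9,
    "minutes": 60,
    "hours": 3_600,
    "days": 86_400,
    "weeks": 7 * 86_400,
    "months (average)": 365.2425 / 12 * 86_400,
    "years (365 days)": 365 * 86_400,
}

MICROSECOND_IN_SECONDS = 1e-6


def microseconds_to(unit: str, value_us: float = 1.0) -> float:
    """Convert a duration given in microseconds into the named unit."""
    return value_us * MICROSECOND_IN_SECONDS / SECONDS_PER_UNIT[unit]


for unit in SECONDS_PER_UNIT:
    # Scientific notation with four decimal places, as in the table.
    print(f"1 µs = {microseconds_to(unit):.4e} {unit}")
```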

Understanding how to convert microseconds to other units of time is essential when working across measurement systems, particularly in electronics, communications, and computing, where precise timing is crucial. The basic rule is simple: divide by 1,000 to convert microseconds to milliseconds, divide by 1,000,000 to convert to seconds, and multiply by 1,000 to convert to nanoseconds; reverse the operation to convert back to microseconds.
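A minimal Python sketch of that conversion, which scales a value into seconds and back out again using the standard `fractions` module so the results are exact. The `convert` function and its ASCII unit codes (`"us"` standing in for µs) are hypothetical:

```python
from fractions import Fraction

# Exact length of each unit in seconds. Fractions avoid the binary rounding
# error that floats such as 1e-6 would introduce.
UNIT_IN_SECONDS = {
    "s": Fraction(1),
    "ms": Fraction(1, 10**3),
    "us": Fraction(1, 10**6),  # ASCII stand-in for µs
    "ns": Fraction(1, 10**9),
}


def convert(value, from_unit: str, to_unit: str) -> Fraction:
    """Scale value into seconds, then out of seconds into to_unit."""
    return Fraction(value) * UNIT_IN_SECONDS[from_unit] / UNIT_IN_SECONDS[to_unit]


print(float(convert(3_500, "us", "ms")))  # 3.5
print(float(convert("0.04", "s", "us")))  # 40000.0
print(float(convert(2, "ms", "ns")))      # 2000000.0
```

Passing decimal values as strings (such as `"0.04"`) lets `Fraction` parse them exactly instead of inheriting a float's rounding.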

Microseconds, each one a millionth of a second, are crucial in various scientific, technological, and practical applications where precision timing is essential. Here are some prominent uses of microseconds:

One microsecond is the time it takes light to travel approximately 300 meters (about 984 feet) in a vacuum. It is also the period of a 1 MHz signal, a frequency within the AM (medium-wave) radio broadcast band.
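The light-travel figure follows from distance = speed × time, and since a periodic signal's frequency is the reciprocal of its period, a 1 µs period corresponds to 1 MHz. A quick Python check using the exact SI value of the speed of light:

```python
# Speed of light in vacuum in m/s (exact, by the SI definition of the metre).
SPEED_OF_LIGHT = 299_792_458
METERS_PER_FOOT = 0.3048  # exact, by the international foot definition

t = 1e-6  # one microsecond, in seconds

# Distance = speed × time.
distance_m = SPEED_OF_LIGHT * t
print(f"{distance_m:.1f} m")                     # 299.8 m
print(f"{distance_m / METERS_PER_FOOT:.0f} ft")  # 984 ft

# Frequency is the reciprocal of the period: f = 1 / T.
frequency_hz = 1 / t
print(f"{frequency_hz:,.0f} Hz")                 # 1,000,000 Hz
```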

Ten microseconds is a time period equal to ten millionths of a second (0.01 milliseconds). It is a very short duration commonly used in scientific and technological measurements that demand high precision.

Units smaller than a microsecond include the nanosecond, picosecond, femtosecond, attosecond, zeptosecond, and yoctosecond, each a progressively finer measure of time used in scientific and technological applications.
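Each of these units is 1,000 times smaller than the one before it, following the SI prefixes nano (10⁻⁹), pico (10⁻¹²), femto (10⁻¹⁵), atto (10⁻¹⁸), zepto (10⁻²¹), and yocto (10⁻²⁴). A short Python sketch that tabulates how many of each unit fit into one microsecond; the variable names are illustrative:

```python
# SI prefixes below micro, with their power of ten relative to one second.
SUBMICRO_UNITS = [
    ("nanosecond", "ns", -9),
    ("picosecond", "ps", -12),
    ("femtosecond", "fs", -15),
    ("attosecond", "as", -18),
    ("zeptosecond", "zs", -21),
    ("yoctosecond", "ys", -24),
]

MICRO_EXPONENT = -6  # 1 µs = 10^-6 s

# How many of each smaller unit make up one microsecond (exact integers).
per_microsecond = {
    name: 10 ** (MICRO_EXPONENT - exponent)
    for name, _symbol, exponent in SUBMICRO_UNITS
}

for name, symbol, exponent in SUBMICRO_UNITS:
    print(f"1 {symbol} = 10^{exponent} s; 1 µs = {per_microsecond[name]:,} {name}s")
```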

How many milliseconds are in 1,000 microseconds?

0.1 ms

1 ms

10 ms

100 ms

How many microseconds are in 0.002 seconds?

20

200

2000

20000

A light pulse lasts for 500 microseconds. How many milliseconds does it last?

0.05 ms

0.5 ms

5 ms

50 ms

Which is longer, 1 millisecond or 750 microseconds?

1 millisecond

750 microseconds

Both are equal

Cannot be determined

How many nanoseconds are in 1 microsecond?

100

1000

10,000

1,000,000

What is the frequency of an event that occurs every 250 microseconds?

4 Hz

40 Hz

400 Hz

4,000 Hz

If a computer operation takes 750 microseconds and another takes 1.2 milliseconds, what is the total time taken in microseconds?

1,950 microseconds

1,200 microseconds

750 microseconds

1,700 microseconds

How many seconds are in 500,000 microseconds?

0.5 seconds

5 seconds

50 seconds

500 seconds

A camera shutter speed is set to 250 microseconds. What is the equivalent speed in milliseconds?

0.025 ms

0.25 ms

2.5 ms

25 ms

A light sensor records data every 100 microseconds. How many records does it collect in 1 second?

10

100

1000

10,000
